[GRPO Explained] DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models

25:36

DeepSeek R1 Theory Overview | GRPO + RL + SFT

53:02

Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters (Paper)

1:19:37

Paper: DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

25:05

Ranking Paradoxes, From Least to Most Paradoxical

56:09

DeepSeek DeepDive (R1, V3, Math, GRPO)

37:17

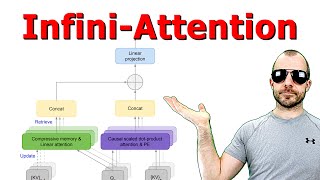

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

27:22

AI Is Making You An Illiterate Programmer

1:03:03