Knowledge Distillation with TAs

19:05

Distilling the Knowledge in a Neural Network

12:35

Knowledge Distillation: A Good Teacher is Patient and Consistent

8:57

Self-Training with Noisy Student (87.4% ImageNet Top-1 Accuracy!)

52:16

Language Models are Open Knowledge Graphs (Paper Explained)

18:25

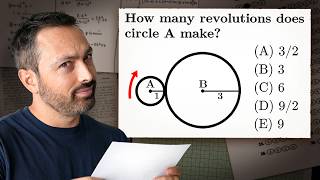

The SAT Question Everyone Got Wrong

17:52

Meta Pseudo Labels

27:15

The Most Misunderstood Concept in Physics

9:51