xLSTM: Extended Long Short-Term Memory

37:17

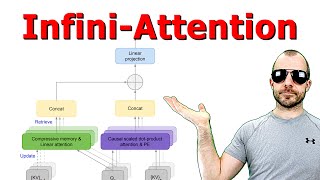

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

40:40

Mamba: Linear-Time Sequence Modeling with Selective State Spaces (Paper Explained)

28:23

TokenFormer: Rethinking Transformer Scaling with Tokenized Model Parameters (Paper Explained)

53:32

ImageNet Moment for Reinforcement Learning?

20:18

Why Does Diffusion Work Better than Auto-Regression?

33:37
