xLSTM: Extended Long Short-Term Memory (40:40)

Mamba: Linear-Time Sequence Modeling with Selective State Spaces (Paper Explained) (37:17)

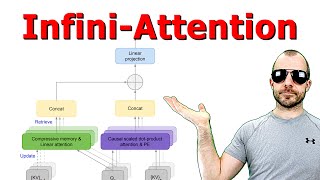

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention (41:45)

Geoff Hinton - Will Digital Intelligence Replace Biological Intelligence? | Vector's Remarkable 2024 (36:15)

Byte Latent Transformer: Patches Scale Better Than Tokens (Paper Explained) (14:14)

XLSTM - Extended LSTMs with sLSTM and mLSTM (paper explained) (57:45)

Visualizing transformers and attention | Talk for TNG Big Tech Day '24 (1:39:39)

Neural and Non-Neural AI, Reasoning, Transformers, and LSTMs (1:02:17)