Mamba architecture intuition | Shawn's ML Notes

7:38

Sharpness-Aware Minimization (SAM) in 7 minutes

46:17

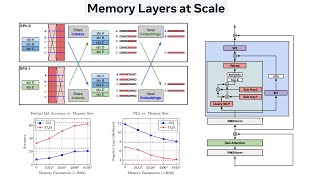

Memory Layers at Scale

37:56

Orignal transformer paper "Attention is all you need" introduced by a layman | Shawn's ML Notes

40:40

Mamba: Linear-Time Sequence Modeling with Selective State Spaces (Paper Explained)

36:03

[UPDATED] ViViT & NaViT papers: How Sora encoded space-time patches | Shawn's ML Notes

36:10

Convolutions in Image Processing | Week 1, lecture 6 | MIT 18.S191 Fall 2020

16:20

Mamba, SSMs & S4s Explained in 16 Minutes

31:51