Layer Normalization - EXPLAINED (in Transformer Neural Networks)

20:58

Blowing up the Transformer Encoder!

16:41

Simplest explanation of Layer Normalization in Transformers

5:18

What is Layer Normalization? | Deep Learning Fundamentals

29:06

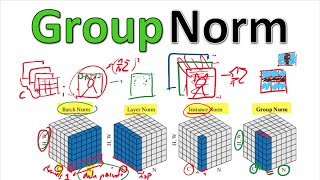

Group Normalization (Paper Explained)

31:51

MAMBA from Scratch: Neural Nets Better and Faster than Transformers

22:43

How might LLMs store facts | DL7

17:00

Batch Normalization in neural networks - EXPLAINED!

13:05