How I Finally Understood Self-Attention (With PyTorch)

24:09

The Dark Matter of AI [Mechanistic Interpretability]

17:38

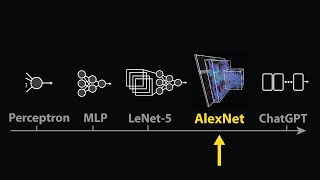

The moment we stopped understanding AI [AlexNet]

57:45

Visualizing transformers and attention | Talk for TNG Big Tech Day '24

28:02

PyTorch Lightning Tutorial - Lightweight PyTorch Wrapper For ML Researchers

31:50

Instability is All You Need: The Surprising Dynamics of Learning in Deep Models

27:14

Transformers (how LLMs work) explained visually | DL5

32:07

Fast LLM Serving with vLLM and PagedAttention

12:59