Deploy LLMs using Serverless vLLM on RunPod in 5 Minutes

21:46

Dify + Ollama: Setup and Run Open Source LLMs Locally on CPU 🔥

32:07

Fast LLM Serving with vLLM and PagedAttention

39:58

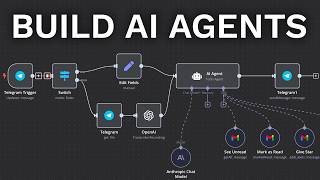

Build Everything with AI Agents: Here's How

2:42:17

Code With AI. How to Integrate Aider with DeepSeek API to Build a Smart Contract Crawler!

23:33

vLLM: Easy, Fast, and Cheap LLM Serving for Everyone - Woosuk Kwon & Xiaoxuan Liu, UC Berkeley

18:44

Turn ANY Website into LLM Knowledge in SECONDS

5:18