BERT explained: Training, Inference, BERT vs GPT/LLaMA, Fine tuning, [CLS] token (58:04)

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training (1:10:55)

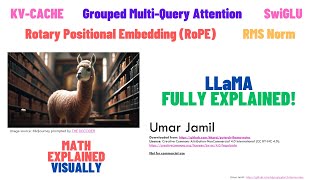

LLaMA explained: KV-Cache, Rotary Positional Embedding, RMS Norm, Grouped Query Attention, SwiGLU (1:10:55 listing aside, run time 23:24)

Fine-Tuning BERT for Text Classification (Python Code) (28:18)

Fine-tuning Large Language Models (LLMs) | w/ Example Code (1:10:21)

Insights into Your NLP Engine with NLP-as & Retrieval with Haystack (27:14)

Transformers (how LLMs work) explained visually | DL5 (49:24)

Retrieval Augmented Generation (RAG) Explained: Embedding, Sentence BERT, Vector Database (HNSW) (1:52:27)