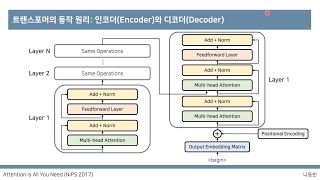

08-2: Transformer

29:04

08-3: ELMo

23:53

08-1: Seq2Seq Learning

1:28:17

[Deep Learning Machine Translation] Transformer: Attention Is All You Need (Thorough Deep Learning Paper Review and Code Practice)

28:29

[Paper Review] VISION TRANSFORMERS NEED REGISTERS

1:25:05

[DLD 2022] Denoising Diffusion Implicit Models

53:10

[Paper Review] Attention is All You Need (Transformer)

38:36

[DMQA Open Seminar] Transformer in Computer Vision

38:35