Will Merrill: The Illusion of State in State-Space Models

48:38

Yash Sarrof: The Expressive Capacity of State Space Models: A Formal Language Perspective

1:07:47

Mor Geva: Transformer Feed Forward Layers are Key-Value Memories, and Build Predictions

41:58

Daniel Hsu: Transformers, parallel computation and logarithmic depth

39:41

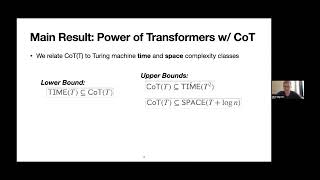

Will Merrill: The Expressive Power of Transformers with Chain of Thought

45:42

Eran Malach: Universal Length Generalization with Turing Programs

45:33

Unlocking State-Tracking in Linear RNNs Through Negative Eigenvalues

55:57

Dan Friedman: Learning Transformer Programs

48:38