Mixture of Experts: Mixtral 8x7B

21:33

Scaling Synthetic Data Creation with 1,000,000,000 Personas

55:32

LLaMA: Open and Efficient Foundation Language Models

12:33

Mistral 8x7B Part 1- So What is a Mixture of Experts Model?

26:52

Andrew Ng Explores The Rise Of AI Agents And Agentic Reasoning | BUILD 2024 Keynote

23:43

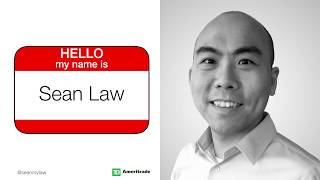

Automatically Find Patterns & Anomalies from Time Series or Sequential Data - Sean Law

22:39

Research Paper Deep Dive - The Sparsely-Gated Mixture-of-Experts (MoE)

1:30:43

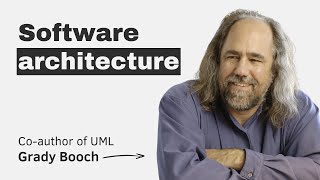

Evolution of software architecture with the co-creator of UML (Grady Booch)

44:06