Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

57:45

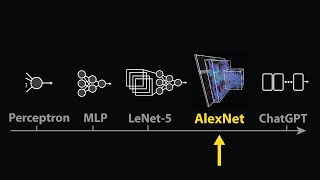

Visualizing transformers and attention | Talk for TNG Big Tech Day '24

1:02:17

RWKV: Reinventing RNNs for the Transformer Era (Paper Explained)

57:00

xLSTM: Extended Long Short-Term Memory

33:26

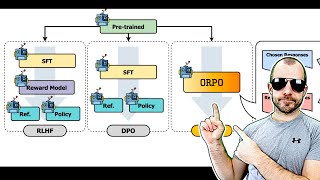

ORPO: Monolithic Preference Optimization without Reference Model (Paper Explained)

17:56

Attention in transformers, step-by-step | DL6

34:32

Mixtral of Experts (Paper Explained)

17:38