Feedback Transformers: Addressing Some Limitations of Transformers with Feedback Memory (Explained)

48:12

Nyströmformer: A Nyström-Based Algorithm for Approximating Self-Attention (AI Paper Explained)

27:07

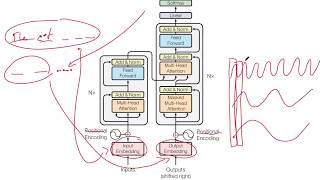

Attention Is All You Need

20:45

Long Short-Term Memory (LSTM), Clearly Explained

17:19

How Your Brain Chooses What to Remember

36:55

Demystifying Byte Latent Transformers (BLT) - LLMs Got Much More Intelligen...

51:38

Linear Transformers Are Secretly Fast Weight Memory Systems (Machine Learning Paper Explained)

45:14

DeBERTa: Decoding-enhanced BERT with Disentangled Attention (Machine Learning Paper Explained)

57:45