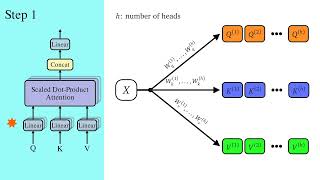

Self-Attention Using Scaled Dot-Product Approach (9:57)

A Dive Into Multihead Attention, Self-Attention and Cross-Attention (26:10)

Attention in transformers, step-by-step | DL6 (36:16)

The math behind Attention: Keys, Queries, and Values matrices (43:48)

Self Attention in Transformers | Transformers in Deep Learning (19:03)

Transformer Architecture | Attention is All You Need | Self-Attention in Transformers (35:08)

Self-attention mechanism explained | Self-attention explained | scaled dot product attention (8:54)