Samuel Mueller | "PFNs: Use neural networks for 100x faster Bayesian predictions"

33:20

A friendly introduction to Deep Learning and Neural Networks

30:12

Neural Fine-Tuning Search for Few-Shot Learning

29:56

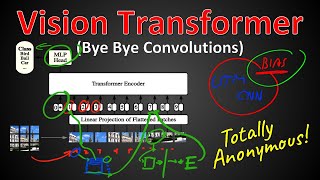

An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale (Paper Explained)

40:35

Rhea Sukthanker and Samuel Dooley - Rethinking Bias Mitigation

48:45

Xingyou (Richard) Song - OmniPred: Towards Universal Regressors with Language Models

49:01

MIT Introduction to Deep Learning (2022) | 6.S191

36:15

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

55:55