Rotary Positional Embeddings: Combining Absolute and Relative

7:38

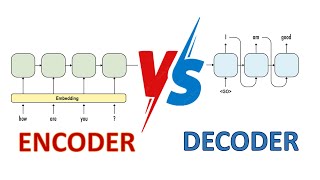

Which transformer architecture is best? Encoder-only vs Encoder-decoder vs Decoder-only models

1:10:55

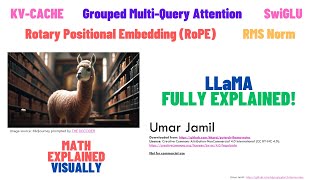

LLaMA explained: KV-Cache, Rotary Positional Embedding, RMS Norm, Grouped Query Attention, SwiGLU

13:39

How Rotary Position Embedding Supercharges Modern LLMs

48:34

Customer Journey Optimisation with Vicky Lewis

29:38

Training an LLM to play chess using Deepseek GRPO reinforcement learning

8:33

The KV Cache: Memory Usage in Transformers

23:26

Rotary Position Embedding explained deeply (w/ code)

12:47