Pyspark Scenarios 11: How to handle double delimiters or multiple delimiters in PySpark (12:32)

Pyspark Scenarios 10: Why should we not use CRC32 for surrogate key generation? (16:10)

Pyspark Scenarios 13: How to handle a complex JSON data file in PySpark (15:35)

Pyspark Scenarios 18: How to handle bad data in a PySpark DataFrame using a PySpark schema (17:50)

Solve using REGEXP_REPLACE and REGEXP_EXTRACT in PySpark (14:20)

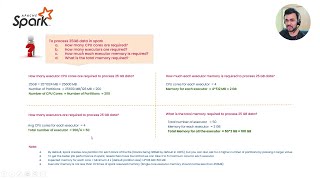

Processing 25 GB of data in Spark: How many executors and how much memory per executor are required? (21:57)

Pyspark Scenarios 20: Difference between coalesce and repartition in PySpark (11:26)

Q11. Real-time Scenario Interview Question | PySpark | Header in PySpark (14:36)