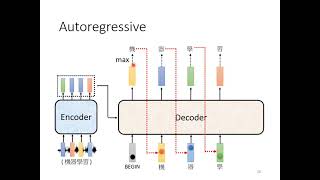

[Machine Learning 2021] Transformer (Part 1)

1:00:34

[Machine Learning 2021] Transformer (Part 2)

28:18

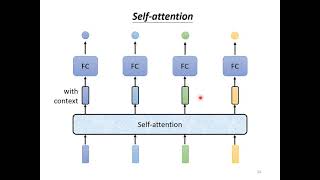

[Machine Learning 2021] Self-Attention Mechanism (Part 1)

27:14

Transformers (how LLMs work) explained visually | DL5

1:27:05

Transformer Paper: A Close Reading, Paragraph by Paragraph

49:32

Transformer

26:10

Attention in transformers, visually explained | DL6

1:44:31

Stanford CS229 I Machine Learning I Building Large Language Models (LLMs)

58:01