Direct Preference Optimization: Your Language Model is Secretly a Reward Model | DPO paper explained

19:39

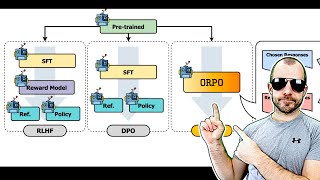

RLHF & DPO Explained (In Simple Terms!)

48:46

Direct Preference Optimization (DPO) explained: Bradley-Terry model, log probabilities, math

19:48

Transformers explained | The architecture behind LLMs

7:58

Large Language Models explained briefly

37:18

My PhD Journey in AI / ML (while doing YouTube on the side)

33:26

ORPO: Monolithic Preference Optimization without Reference Model (Paper Explained)

9:52

Training large language models to reason in a continuous latent space – COCONUT Paper explained

21:15