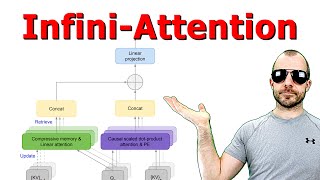

Deep dive - Better Attention layers for Transformer models

47:19

Deep Dive: Advanced distributed training with Hugging Face LLMs and AWS Trainium

38:47

Deep Dive: Compiling deep learning models, from XLA to PyTorch 2

36:12

Deep Dive: Optimizing LLM inference

1:09:48

Building Lovable: $10M ARR in 60 days with 15 people | Anton Osika (CEO and co-founder)

57:45

Visualizing transformers and attention | Talk for TNG Big Tech Day '24

42:04

Decoder-only inference: a step-by-step deep dive

37:17

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

45:19