Master Multi-headed attention in Transformers | Part 6

25:54

Positional Encoding in Transformers | Deep Learning

26:10

Attention in transformers, step-by-step | DL6

43:48

Self Attention in Transformers | Transformers in Deep Learning

11:25

A visual guide to Bayesian thinking

20:11

Why Scaling by the Square Root of Dimensions Matters in Attention | Transformers in Deep Learning

21:09

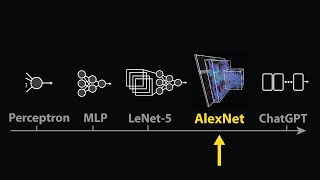

Transformers in Deep Learning | Introduction to Transformers

57:45

Visualizing transformers and attention | Talk for TNG Big Tech Day '24

17:38