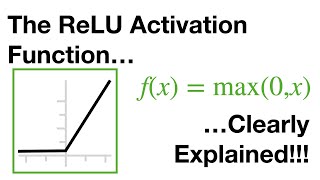

L13- Activation Functions (ReLU | Dying ReLU & Gradient Exploding)

32:56

L14- NumPy, TensorFlow, Keras, scikit-learn

59:41

L12- Activation Functions (Sigmoid & Tanh | Saturation & Gradient Vanishing)

8:58

Neural Networks Pt. 3: ReLU In Action!!!

16:20

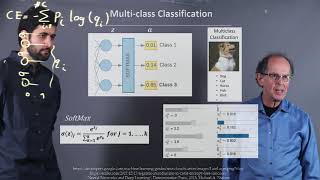

A Simple Explanation of the Softmax Function and How to Move On to the Confusion Matrix

29:31

Advanced Deep Learning: Gradient Descent (Kurdish Language)

22:21

Faster Optimization Techniques

8:15

Categorical Cross-Entropy Loss Softmax

30:20