Flash Attention Explained

57:45

Visualizing transformers and attention | Talk for TNG Big Tech Day '24

27:14

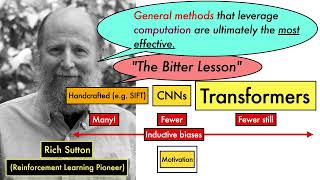

Transformers (how LLMs work) explained visually | DL5

58:58

FlashAttention - Tri Dao | Stanford MLSys #67

58:06

Stanford Webinar - Large Language Models Get the Hype, but Compound Systems Are the Future of AI

27:07

Attention Is All You Need

1:00:00

Speculative RAG: Enhancing Retrieval Augmented Generation through Drafting Explained

30:49

Vision Transformer Basics

11:54