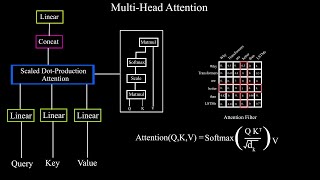

A Dive Into Multihead Attention, Self-Attention and Cross-Attention (8:11)

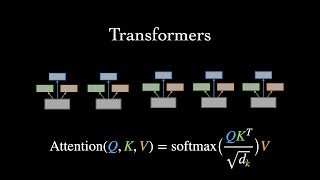

Transformer Architecture (16:44)

What are Transformer Neural Networks? (11:55)

Attention is all you need || Transformers Explained || Quick Explained (58:04)

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training (16:09)

Self-Attention Using Scaled Dot-Product Approach (15:25)

Visual Guide to Transformer Neural Networks - (Episode 2) Multi-Head & Self-Attention (12:32)

Self Attention with torch.nn.MultiheadAttention Module (26:10)